Cisco UCS: Virtual Interface Cards & VM-FEX

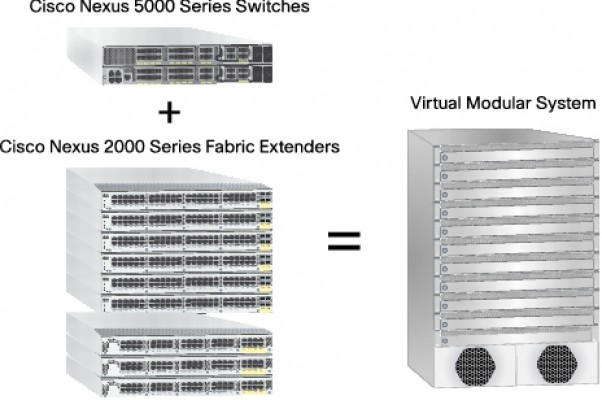

Hello once again! Today I decided to talk about some Cisco innovations around the UCS platform. I’m going to try my best to keep this post high-level and EASY to understand, as most things “virtual” can get fairly complex. First up is the Virtual Interface Card (VIC). This is Cisco’s answer to 1:1 mapped mezzanine cards in blade servers and other “virtual connectivity” mezzanine solutions. Instead of having a single MEZZ/NIC mapped to a specific internal/external switch/interconnect, we developed a vNIC optimized for virtualized…