ConfigBytes: Nexus 6000/5600 Latency & Buffer Monitor

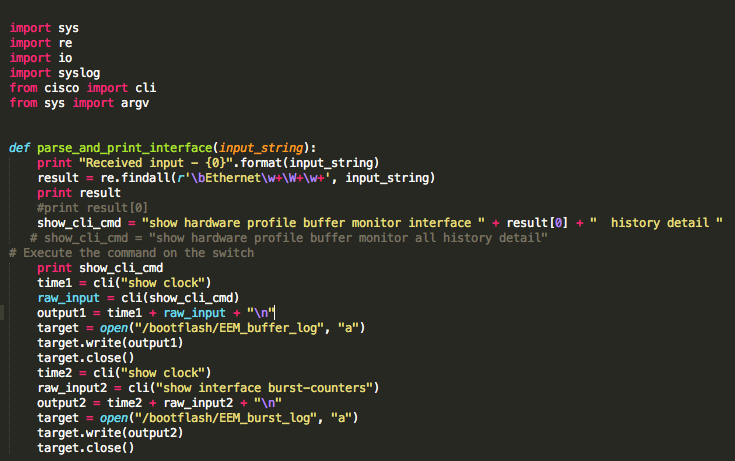

#CONFIGBYTES Episode 2

Platforms: Nexus 6000 & 5600 (UPC-based ASIC)

Latency Monitor: Full Documentation

The switch latency monitoring feature marks each packet with a timestamp at ingress and again at egress. To calculate the latency of each packet through the system, the switch compares the ingress timestamp with the egress timestamp. The feature lets you display historical latency averages between all pairs of ports, as well as real-time latency data. You can use the latency measurements to identify which flows…
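
To make the arithmetic concrete, here is a minimal Python sketch of the idea described above: subtract the ingress timestamp from the egress timestamp for each packet, then average the results per (ingress port, egress port) pair. This is only an illustration and not how the ASIC or NX-OS implements the feature; the function names, the nanosecond units, and the sample values are all assumptions made up for the example.

```python
from collections import defaultdict
from statistics import mean


def packet_latency_ns(ingress_ts_ns, egress_ts_ns):
    """Latency of one packet: egress timestamp minus ingress timestamp."""
    return egress_ts_ns - ingress_ts_ns


def average_latency_by_port_pair(samples):
    """Average latency for every (ingress port, egress port) pair.

    `samples` is an iterable of tuples:
    (ingress_port, egress_port, ingress_ts_ns, egress_ts_ns).
    """
    per_pair = defaultdict(list)
    for ingress_port, egress_port, t_in, t_out in samples:
        per_pair[(ingress_port, egress_port)].append(
            packet_latency_ns(t_in, t_out)
        )
    return {pair: mean(values) for pair, values in per_pair.items()}


# Hypothetical samples; port names and timestamps are invented.
samples = [
    ("Eth1/1", "Eth1/2", 1000, 1800),
    ("Eth1/1", "Eth1/2", 2000, 3000),
    ("Eth1/3", "Eth1/2", 1500, 2100),
]

averages = average_latency_by_port_pair(samples)
for (src, dst), avg in sorted(averages.items()):
    print(f"{src} -> {dst}: average latency {avg} ns")
```

Grouping by port pair mirrors the "between all pairs of ports" view mentioned above; a real-time view would look at the most recent samples instead of the running average.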